Olga Mykhoparkina

Founder, CEO

May 21, 2025

AI recommendations are getting smarter – but how?

You’ve probably noticed it. AI systems are getting better at suggesting what content to write, what products to show, or which leads to chase. Everything feels a bit more personal.

But most marketers have no idea why that’s happening. It’s not just better algorithms or more training data. It’s something called RAG, short for retrieval-augmented generation.

The name sounds like something out of a research paper, but the concept is both simple and game-changing if you want to understand how artificial intelligence works behind the scenes and how your content fits into it.

Most large language models work like this: they’ve read a massive amount of content during training, and when you ask them something, they predict the best answer based on what they remember. The problem? They don’t actually know if what they’re saying is still accurate—or even real.

That’s where RAG models come in.

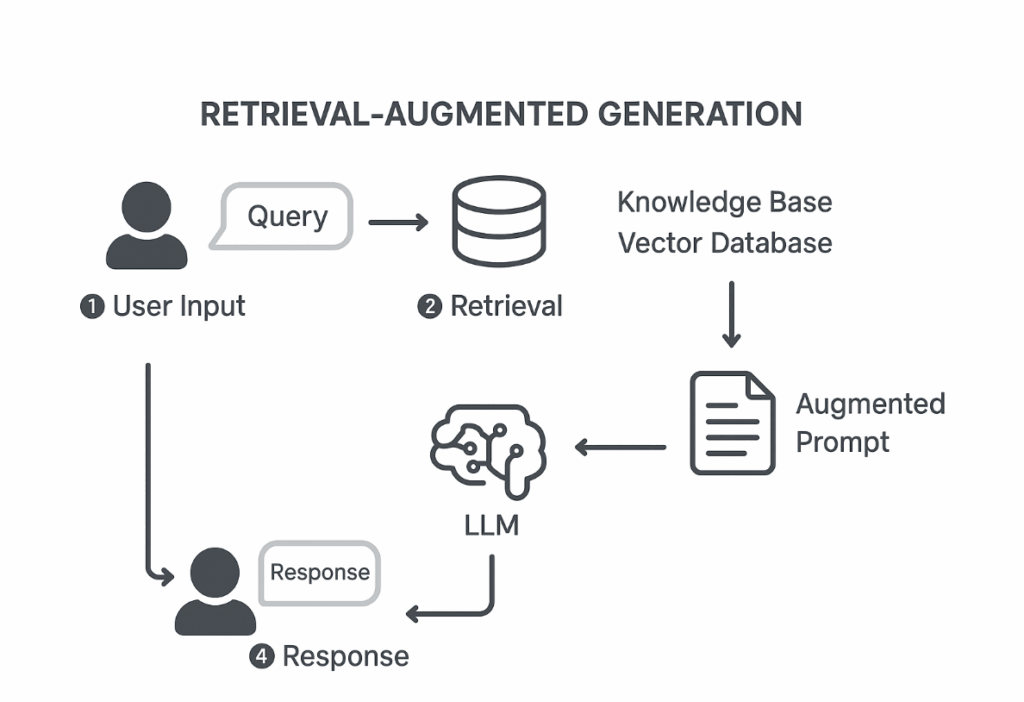

When someone asks a question, a RAG system first retrieves relevant information from external data sources, such as your internal docs, medical literature, or clinical guidelines, so the answer can draw on up-to-date information. Then the system blends this retrieved data with the model’s existing knowledge to create a contextually relevant response.
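To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `DOCUMENTS` knowledge base, the word-overlap scoring, and the prompt wording are hypothetical, and production systems typically use semantic search and a real language model instead of this toy logic.

```python
import re
from collections import Counter

# Hypothetical mini knowledge base; the texts are illustrative only.
DOCUMENTS = {
    "pricing": "Our Pro plan costs 49 dollars per month and includes API access.",
    "onboarding": "New users finish onboarding by connecting a data source.",
    "support": "Support is available by email on weekdays.",
}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, top_k=1):
    """Rank documents by how many query words they share (toy scoring)."""
    query_terms = Counter(tokenize(query))
    scored = []
    for doc_id, text in documents.items():
        doc_terms = Counter(tokenize(text))
        # Counter intersection keeps the minimum count of each shared word.
        overlap = sum((query_terms & doc_terms).values())
        scored.append((overlap, doc_id))
    # Highest overlap first; ties are broken alphabetically by document id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for overlap, doc_id in scored[:top_k] if overlap > 0]


def build_prompt(query, documents, top_k=1):
    """Blend the retrieved passages with the question into one prompt string."""
    doc_ids = retrieve(query, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("How much does the Pro plan cost per month?", DOCUMENTS))
```

The prompt returned by `build_prompt` is what would be handed to a language model. The point of the sketch is the order of operations: look things up first, then generate from what was found.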

Read also: From Blog Posts to Answers: Repurposing for AI Assistants

Traditional search just gives you a list of links. RAG models extract and process the content behind those links, then weave it into a coherent, useful reply.

Your content can directly influence AI systems’ recommendations—not just search rankings. In RAG workflows, evaluation metrics typically include retrieval accuracy, data freshness, and overall quality. That means your documentation, product pages, or whitepapers can become part of an AI system’s decision-making engine.

For example, in healthcare, RAG systems can pull from medical literature and clinical guidelines to ensure that doctors receive relevant information grounded in evidence. In marketing, large language models using RAG can recommend strategies based on your latest content instead of outdated insights.

One of the biggest issues in artificial intelligence is “hallucination,” where models generate confident but wrong answers. RAG systems reduce this risk by pulling in up-to-date information before responding, which improves both reliability and trust.

That means the recommendations you get are:

You might be interested in: 10 Content Formats That Get Picked Up by LLMs

In RAG-based AI development, performance is measured using metrics such as retrieval accuracy, data completeness, and the quality of contextually relevant responses. These benchmarks ensure that RAG models not only work in theory but actually help users make better decisions.
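The article doesn’t define “retrieval accuracy” precisely. One common way to put a number on it is precision and recall over the top-k retrieved documents, sketched below in Python. The function names and the sample document ids are assumptions for illustration, not a standard that any particular platform is confirmed to use.

```python
def precision_at_k(retrieved, relevant, k):
    """Share of the top-k retrieved documents that are actually relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k


def recall_at_k(retrieved, relevant, k):
    """Share of all relevant documents that appear in the top-k results."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in set(retrieved[:k]) if doc_id in relevant)
    return hits / len(relevant)


if __name__ == "__main__":
    # Hypothetical ranking from a retriever, and the pages a human judged relevant.
    retrieved = ["guide", "pricing", "faq", "changelog"]
    relevant = {"guide", "faq", "whitepaper"}
    print(precision_at_k(retrieved, relevant, 3))
    print(recall_at_k(retrieved, relevant, 3))
```

Precision tells you how much of what was retrieved was useful, while recall tells you how much of the useful material was found. A page that never gets retrieved can’t contribute to either.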

Whether you’re working with large language models in marketing or using RAG for medical research, RAG lets AI systems retrieve relevant information from trusted sources and ground their answers in it. By combining stored knowledge with up-to-date information, it bridges the gap between static training and real-world, dynamic content, delivering results that users can trust.

| Feature | Traditional recommendation engines | RAG (retrieval-augmented generation) |
| --- | --- | --- |
| How it works | Uses historical data and patterns (like clicks or past behavior) | Looks up real-time content before generating a response |
| Data source | Static – based on what’s already stored or trained | Dynamic – pulls from up-to-date content like docs, blogs, or databases |
| Accuracy | Can be hit-or-miss, especially with new or niche topics | More accurate – references actual info before suggesting anything |
| Context awareness | Limited – relies on user behavior, not content context | High – understands user intent and content context |
| Content usage | Doesn’t actually read your content – just tracks performance | Actively reads and uses your content to generate suggestions |
| Best for | Recommending products, upsells, or content based on past activity | Giving smarter, real-time answers or suggestions based on content |

Related: Schema & Structured Data for LLM Visibility: What Actually Helps?

RAG isn’t just some abstract tech. You’ll start noticing it in the AI models and platforms you already use. Here’s where it shows up in real life:

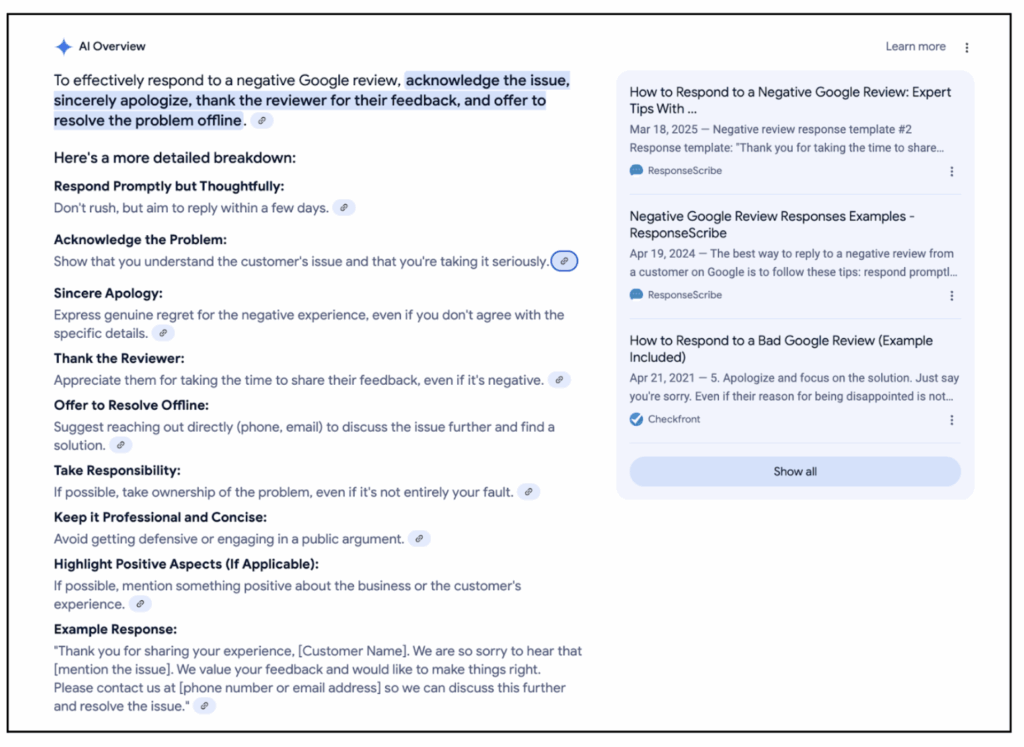

And here’s where it gets interesting for SEO folks: when search engines or generative models surface content, the retrieval step decides what’s worth showing. If your content is clear, rich in domain-specific knowledge, and easy to reference, you stand a better chance of appearing in those AI-generated answers.

Read: Does Reddit influence LLMs responses? (The 2025 Research)

Here’s the new reality for SEO: generative models powered by RAG systems serve answers from structured and retrieved data, not just whatever ranks on page one. To make it into the “retrieval pool,” your content must be easy to retrieve in real time and clear about the data it presents.

That means:

These elements feed into evaluation metrics that AI systems use to measure answer quality—ranging from human evaluation to automated scoring of generated responses.

One key advantage of RAG is how it merges what the model learned in training with external data sources. For instance, a healthcare assistant can combine clinical guidelines and scientific literature with patient-specific inputs to generate contextually relevant recommendations. In marketing, RAG can pull relevant structured data and usage patterns to generate responses adapted to the moment.

This blend ensures:

Related: Structuring Web Pages for AI-First Indexing

You don’t need to throw away your entire strategy, but with artificial intelligence moving toward generative models and real time data integration, it’s time to make your pages easier for AI to scan and reference.

That means:

The future of visibility isn’t just ranking in search engines—it’s being part of generative models’ answers. And that’s where evaluation metrics meet quality content.

Want expert help getting your SaaS content picked up by LLMs? Here are the best AI marketing agencies for SaaS brands

RAG is more than just a tech buzzword. It’s a real upgrade in how AI tools pull and present information: a retrieval component finds relevant sources, and a generation step turns them into an answer. For marketers, it’s a clear sign that standalone generative models are giving way to RAG-based systems with stronger context awareness. This shift is part of a rapidly changing AI landscape, where contextual relevance and response accuracy matter as much as speed.

For SEO folks, this means one thing: double down on quality content that answers user queries accurately. Forget tricks or shortcuts. You’re no longer just writing for search engines. You’re creating resources for AI systems that use dense vector retrieval, a semantic search method that finds information by meaning rather than exact keywords.
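As a rough illustration of finding information by meaning, the Python sketch below ranks pages by cosine similarity between vectors. The three-number vectors and page titles are made up for the example. In a real system an embedding model would produce vectors with hundreds of dimensions, and nothing here reflects a specific vendor’s implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional "embeddings" standing in for an embedding model's output.
PAGE_VECTORS = {
    "how to cut customer churn": [0.9, 0.1, 0.0],
    "pricing page teardown": [0.1, 0.9, 0.1],
    "office holiday schedule": [0.0, 0.1, 0.9],
}


def semantic_search(query_vector, page_vectors, top_k=1):
    """Return the titles whose vectors point in the most similar direction to the query."""
    ranked = sorted(
        page_vectors,
        key=lambda title: cosine_similarity(query_vector, page_vectors[title]),
        reverse=True,
    )
    return ranked[:top_k]


if __name__ == "__main__":
    # Pretend vector for the query "reduce subscription cancellations".
    print(semantic_search([0.8, 0.2, 0.1], PAGE_VECTORS))
```

The query shares no keywords with the churn article, yet that article ranks first because the two vectors point in nearly the same direction. That is the practical difference between semantic search and exact keyword matching.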

At Quoleady, we help B2B SaaS brands produce content that AI can find in their knowledge bases, cite as external knowledge, and deliver in AI responses that improve user satisfaction.

Regular AI tools (like a basic chatbot) generate output solely from their static training data, so they often miss current context. RAG, on the other hand, uses a retrieval step to pull external knowledge from a knowledge base, often a domain-specific one, before it starts generating. This improves contextual relevance and response accuracy.

Because it changes how recommendations get made—from blog ideas to product suggestions and chatbot replies. RAG technology enables AI applications to draw from knowledge bases that reflect your brand’s expertise, which can directly impact customer satisfaction. If your content is part of those sources, you become part of the generation processes that shape AI responses.

See also: LLMs vs SEO: What’s Changing in Search Discovery

Yes. RAG performance in ranking and retrieval depends on retrieval accuracy, the system’s ability to understand user queries, and the depth of your knowledge base. When RAG-based systems pull external knowledge from your content, they feed it into the generation step that produces the answer, which means your visibility is tied to being part of trusted, domain-specific knowledge bases.

Not radically, but you should make your content easier to retrieve. That means structuring it in self-contained sections that suit semantic search, keeping in mind that an AI system’s context is limited by what its retrieval step can find and by the size of its knowledge base, and backing your claims with reliable sources. For healthcare and legal sectors, this may include integrating patient data or relevant case law while respecting data privacy, a critical challenge in the rapidly evolving AI landscape.
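One reason structure matters: retrieval systems commonly index a page as smaller passages rather than as a whole. The Python sketch below groups paragraphs into chunks under a word limit. The `chunk_paragraphs` name, the blank-line paragraph convention, and the 60-word default are assumptions for illustration, and real pipelines vary widely in how they split content.

```python
def chunk_paragraphs(text, max_words=60):
    """Group blank-line-separated paragraphs into chunks of roughly max_words words.

    A single paragraph longer than max_words is kept whole rather than split.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks = []
    current = []
    word_count = 0
    for paragraph in paragraphs:
        paragraph_words = len(paragraph.split())
        # Start a new chunk when adding this paragraph would exceed the limit.
        if current and word_count + paragraph_words > max_words:
            chunks.append("\n\n".join(current))
            current = []
            word_count = 0
        current.append(paragraph)
        word_count += paragraph_words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    page = "What is RAG?\n\nRAG retrieves sources before it answers.\n\nWhy it matters for SEO."
    for chunk in chunk_paragraphs(page, max_words=8):
        print(repr(chunk))
```

A paragraph that makes sense on its own survives this kind of splitting. A paragraph that depends on text three sections earlier loses its meaning once it is separated from it.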

Absolutely. Smaller brands with focused knowledge bases can see their content adopted in AI-generated responses faster, especially if they create high-quality, contextually relevant material that RAG systems can easily retrieve. The key is aligning with the shift from standalone generative models to retrieval-driven generation that serves accurate responses to niche user queries.
