Simran Bharti

Content Manager

Jul 15, 2025

Search is evolving again.

For years, G2 and Capterra were key platforms for SaaS discovery. Comparing software tools meant visiting review platforms, sorting by ratings, and weighing user feedback.

But the discovery process has changed. Buyers increasingly turn to ChatGPT, Claude, Gemini, or Perplexity to find and evaluate SaaS tools directly – skipping the search results and review aggregators.

That shift raises an important question: do G2 and Capterra reviews influence which tools ChatGPT recommends?

We tested it.

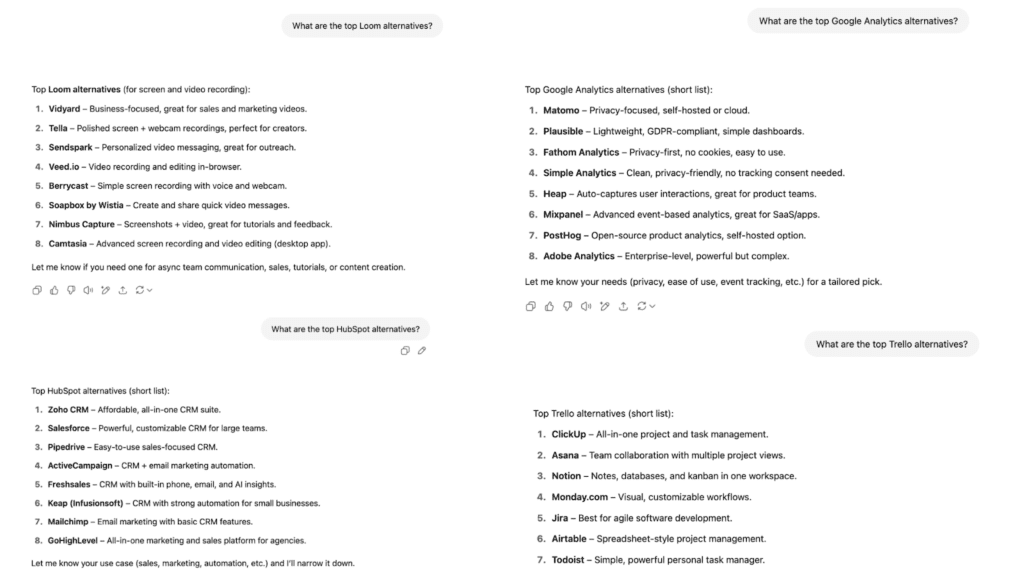

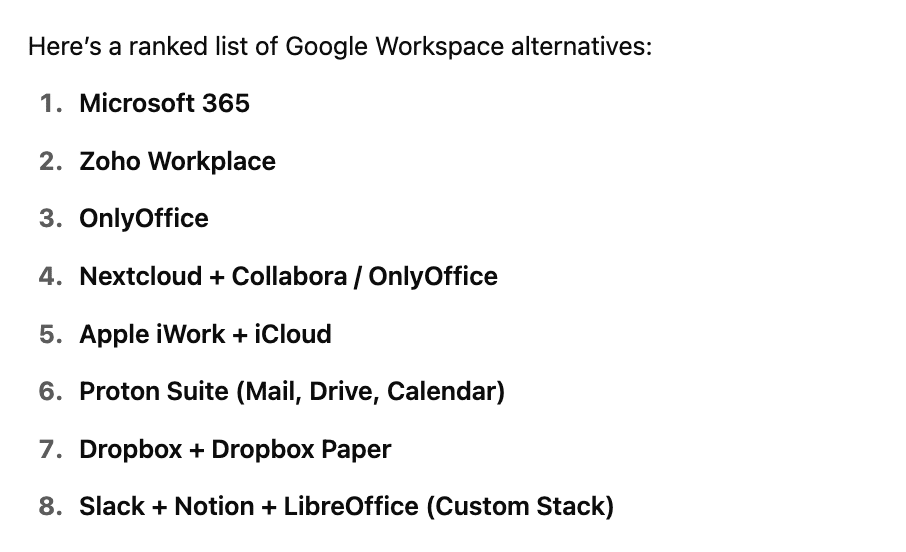

We picked dozens of high-intent SaaS “alternatives” keywords, such as “Notion alternatives” and “ClickUp alternatives”.

Then we asked ChatGPT for its recommended alternatives for each keyword.

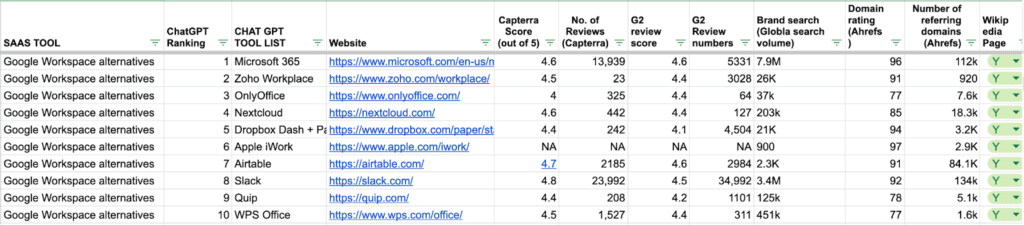

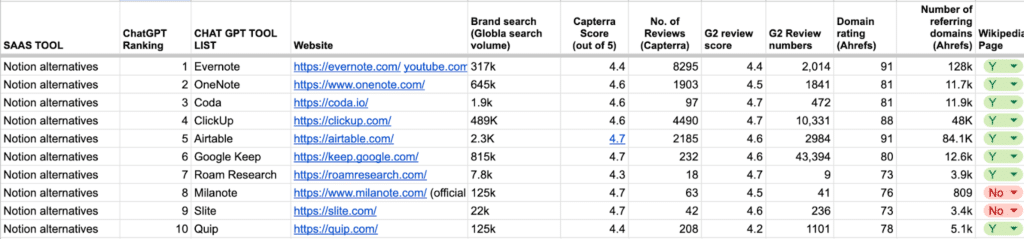

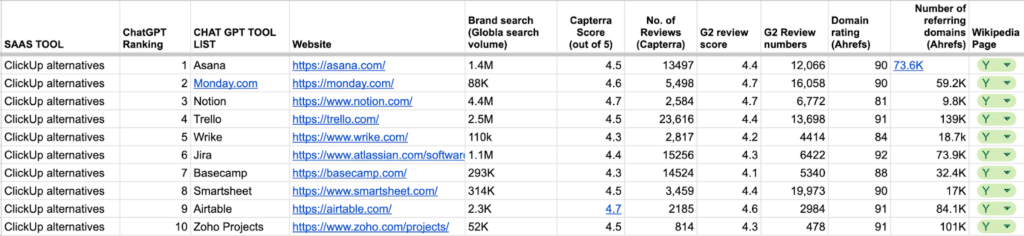

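For every tool that showed up in the results, we collected its review counts and average ratings on G2 and Capterra, its brand search volume, whether it has a Wikipedia page, and its Domain Rating (Ahrefs).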

After collecting the data, we set out to understand how much weight review platforms carry in influencing ChatGPT responses.

After analyzing the data, we found that 100% of the tools mentioned in ChatGPT answers had reviews on Capterra, 99% had reviews on G2, and 78.8% had a Wikipedia page. This suggests that G2 and Capterra serve as a basic inclusion signal – if your tool isn’t listed on them, you’re likely to be excluded altogether.

But visibility alone doesn’t determine placement. Review platforms help LLMs recognize a tool’s legitimacy by providing structured and frequently updated information.

However, our research shows that even with strong review presence, some tools ranked lower than those with far fewer reviews, weaker ratings, or less brand visibility.

Example:

This suggests that while G2 and Capterra reviews help establish trust, they aren’t the primary criteria for ranking.

Takeaway: Yes, G2 and Capterra reviews do influence ChatGPT results, but only at the inclusion level. Review platform presence is almost always required to show up.

But it’s not enough to rank high. If you want to show up in ChatGPT results, being on review sites is the starting point, not the strategy.
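The inclusion percentages above boil down to a simple share calculation: for each platform, count the mentioned tools that have a presence there and divide by the total. Here is a minimal sketch of that arithmetic. The `Tool` record, its field names, and the sample rows are hypothetical and are not the study's dataset.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    # Hypothetical record layout; field names are assumptions for illustration.
    name: str
    on_capterra: bool
    on_g2: bool
    has_wikipedia: bool


def coverage(tools, field):
    """Return the percentage of tools whose boolean attribute `field` is True."""
    if not tools:
        raise ValueError("no tools to summarize")
    hits = sum(1 for tool in tools if getattr(tool, field))
    return 100.0 * hits / len(tools)


# Made-up rows, only to show the calculation.
sample = [
    Tool("Tool A", True, True, True),
    Tool("Tool B", True, True, False),
    Tool("Tool C", True, False, True),
    Tool("Tool D", True, True, True),
]

for field in ("on_capterra", "on_g2", "has_wikipedia"):
    print(f"{field}: {coverage(sample, field):.1f}%")
```

A share close to 100% only tells you that listed tools are almost always present on a platform. It says nothing about where they rank, which is why inclusion and position are measured separately.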

Once we established that review platforms help with inclusion, we dug deeper: does having more reviews actually boost your position in ChatGPT’s rankings?

The data shows only a weak connection between the number of reviews and a tool’s placement:

Some tools with just a few hundred reviews outranked tools with tens of thousands.

Example: Coda, with just 97 reviews on Capterra and 472 on G2, ranked #3 for Notion alternatives. In contrast, ClickUp had 4,490 reviews on Capterra and 10,331 on G2 but ranked lower at #4. This reinforces the insight that review volume does not directly determine position in LLM answers.

Takeaway: Review count correlates only weakly with ChatGPT rank. More reviews give a small edge, but don’t guarantee top visibility. In many cases, LLMs seemed to prioritize contextual relevance over raw quantity.
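If you want to run a similar check on your own data, one common way to measure the link between review count and position is a rank correlation. The sketch below uses Spearman's coefficient, which is our assumption here, since the exact coefficient used for the numbers in this article isn't specified. The review counts and positions are made up. Because position 1 is the top spot, a negative value means that more reviews go with a better position.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 (smallest) to n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # i and j are 0-based positions
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical review counts and ChatGPT positions (1 = listed first).
reviews = [12000, 500, 8000, 300, 150]
positions = [1, 2, 3, 4, 5]
print(round(spearman(reviews, positions), 2))
```

In this toy sample the coefficient is strongly negative because the largest review counts sit near the top. A value near zero, like the weak connection described above, would mean review volume tells you little about position.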

After looking at review counts, we asked: do higher average scores help tools rank higher?

We checked whether tools with better G2/Capterra scores ranked higher.

These values are close to zero, suggesting average review scores have little to no impact on tool position in LLM answers.

Example:

Conclusion: Ratings help, but don’t significantly influence rank. Tools with low scores were still included, indicating that review quality isn’t a strong ranking factor.

Brand popularity is often assumed to correlate with visibility. We analyzed whether tools with higher global brand search volumes consistently ranked better in ChatGPT answers. The result was a weak correlation of –0.20.

Example: For ClickUp alternatives, Monday.com, with a brand search volume of just 88K, ranked #2. In contrast, Trello (2.5M), Notion (4.4M), and Jira (1.1M) – all significantly more searched – were ranked lower. This emphasizes that brand visibility isn’t a strong predictor of placement.

While brand popularity may help a tool get included in LLM-generated answers, it doesn’t determine where it will rank. In our findings, lower-profile tools often outranked those with millions in brand search volume. This suggests LLMs prioritize topical relevance and authoritative context over sheer name recognition.

Takeaway: Brand popularity helps, but low-visibility tools still outrank major names.

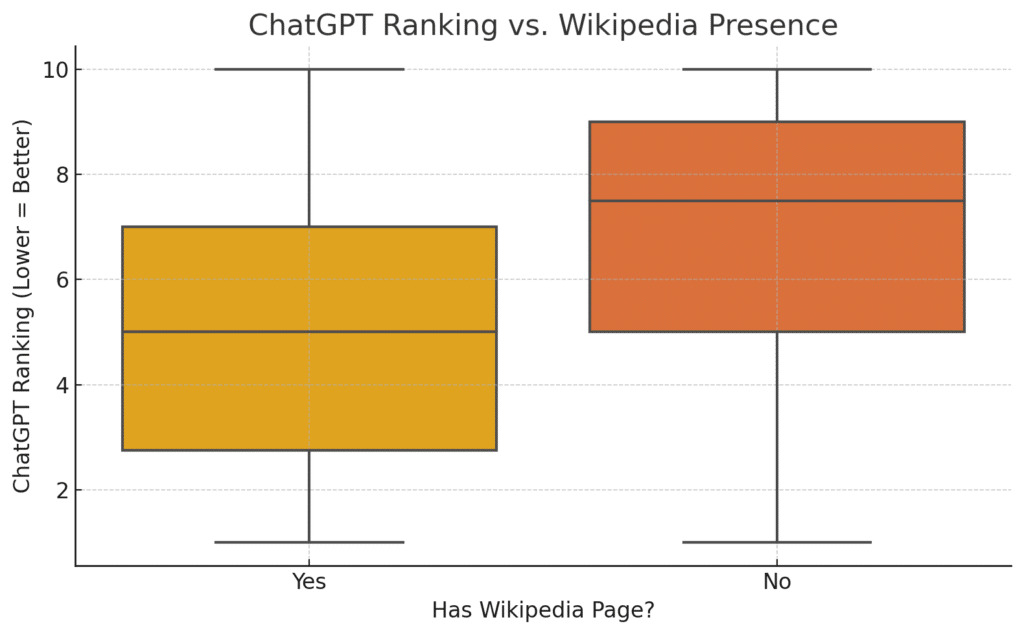

We also tested whether having a structured, credible presence on Wikipedia influenced tool rankings. Wikipedia is one of the most cited sources on the web, often referenced directly or indirectly in LLM training data.

According to our data, 78.8% of listed tools had a Wikipedia page. Tools with a Wikipedia presence had an average rank of 5.07, while those without sat further down the list at an average of 6.91. The correlation came out to –0.26 (negative because rank 1 is the top position), indicating a moderate connection between having a Wikipedia page and higher placement in ChatGPT results.

Takeaway: Tools with Wikipedia pages tend to rank higher in ChatGPT answers, but a page isn’t required. It likely supports LLM inclusion by reinforcing brand legitimacy and structured context.
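A yes/no signal such as “has a Wikipedia page” can be correlated with position using the point-biserial coefficient, which is the Pearson correlation with one variable coded as 0 or 1. This is a sketch under that assumption, since the article doesn't name the coefficient behind the Wikipedia figure. The function name and sample data are hypothetical. Because position 1 is the top spot, a negative result means tools with a page tend to sit higher in the list.

```python
from math import sqrt
from statistics import mean, pstdev


def point_biserial(flags, positions):
    """Correlation between a yes/no flag and a numeric position.

    Uses the standard formula (M1 - M0) / s * sqrt(n1 * n0 / n^2),
    where s is the population standard deviation of all positions.
    """
    if len(flags) != len(positions):
        raise ValueError("inputs must have the same length")
    with_page = [p for f, p in zip(flags, positions) if f]
    without_page = [p for f, p in zip(flags, positions) if not f]
    if not with_page or not without_page:
        raise ValueError("need at least one tool in each group")
    spread = pstdev(positions)
    if spread == 0:
        raise ValueError("positions are all identical")
    n = len(positions)
    share = len(with_page) * len(without_page) / (n * n)
    return (mean(with_page) - mean(without_page)) / spread * sqrt(share)


# Hypothetical sample: three tools with a page, two without.
has_page = [True, True, True, False, False]
positions = [1, 2, 4, 3, 5]
print(round(point_biserial(has_page, positions), 2))
```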

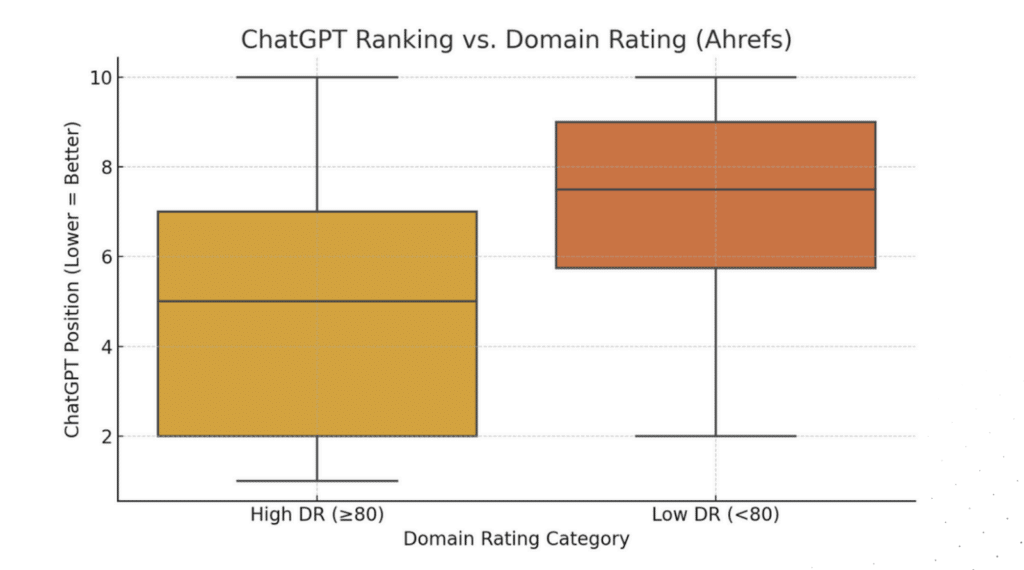

Does high DR mean high ChatGPT ranking?

Backlink authority is a core signal in traditional SEO, but does it matter in LLM rankings?

Our analysis of Domain Rating (Ahrefs) showed a clear pattern: tools with stronger backlink profiles tended to rank better in ChatGPT responses. We found a moderate correlation of –0.40 between Domain Rating and ChatGPT ranking – the strongest among all signals we tested. Tools with a DR of 80 or above had an average rank of 4.97, while those with a DR below 80 sat noticeably further down at an average of 7.04.

Takeaway: Higher DR is linked to better ChatGPT placement more than any other signal we tested, but the correlation is moderate and a high DR guarantees nothing. Strong backlink profiles may increase a tool’s perceived authority during LLM training and retrieval, making them more likely to appear in AI-generated answers, but they’re just one part of the equation.
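The DR comparison above is a threshold split: group tools by whether their Domain Rating is at or above 80, then average the position within each group. A minimal sketch of that split follows. The function name and the sample rows are hypothetical. Only the cutoff of 80 comes from the analysis above.

```python
from statistics import mean


def mean_position_by_dr(rows, threshold=80):
    """Split (domain_rating, position) pairs at `threshold`.

    Returns (average position for DR >= threshold,
             average position for DR < threshold).
    """
    high = [pos for dr, pos in rows if dr >= threshold]
    low = [pos for dr, pos in rows if dr < threshold]
    if not high or not low:
        raise ValueError("both DR groups need at least one tool")
    return mean(high), mean(low)


# Made-up (Domain Rating, ChatGPT position) pairs, only to show the split.
rows = [(92, 1), (85, 3), (80, 4), (74, 2), (61, 7), (55, 9)]
high_avg, low_avg = mean_position_by_dr(rows)
print(f"DR >= 80: {high_avg:.2f}, DR < 80: {low_avg:.2f}")
```

A lower average means a better position. Note that individual tools can still break the pattern, like the DR 74 tool in position 2 in this toy sample.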

Here’s what we uncovered after analyzing hundreds of SaaS tools and dozens of “alternatives” prompts across ChatGPT and other LLMs:

1. High review scores help – but don’t guarantee top placement.

While strong G2 and Capterra ratings may boost trust, they don’t heavily influence ranking within ChatGPT. LLMs prioritize more than just a 4.7 average.

2. More reviews give a slight edge – but low-review tools can still outrank giants.

Tools with just a few hundred reviews often appeared above those with tens of thousands. It’s not about volume alone – it’s about how and where you’re mentioned.

3. Having a Wikipedia page improves chances of ranking higher.

We found a moderate correlation between Wikipedia presence and better placement. Structured, well-referenced entries may help tools appear more legitimate to LLMs.

4. Brand search volume doesn’t strongly influence rankings.

Popular tools aren’t always prioritized. In several cases, lower-search-volume tools ranked higher, suggesting LLMs care more about authority and relevance than mass awareness.

5. Domain Rating (DR) is the most reliable SEO signal for LLMs.

DR showed the strongest correlation with ChatGPT placement. Tools with strong backlink profiles were more likely to rank higher – reinforcing the power of high-authority mentions.

6. Some tools still rank high despite weak visibility.

Even products with low DR, minimal reviews, or no Wikipedia presence made it into ChatGPT’s top results – likely due to well-placed, context-rich mentions in trusted content.

7. Relevance and coverage may outweigh popularity.

LLMs aren’t just scanning for the loudest voices. They prioritize tools that are well-described, linked in reputable sources, and aligned with the specific prompt.

G2 and Capterra reviews help with buyer credibility, but they don’t guarantee LLM visibility.

Our research shows that what influences ChatGPT results is broader and more nuanced: backlink strength, mention relevance, consistent messaging, and machine-readable formatting. For a detailed guide, check out how you can get your brand into ChatGPT answers.

If you’re a B2B SaaS brand trying to show up in ChatGPT, Perplexity, or Google AI Overviews, you need more than reviews – you need LLM Optimization.

At Quoleady, we help SaaS brands:

This research gives you clarity. Now it’s time to act on it.

If you’re ready to improve your chances of being recommended by ChatGPT, Gemini, or Claude…

Let’s talk.

Let us know what you are looking to accomplish.

We’ll give you a clear direction of how to get there.

All consultations are free 🔥